Currently, a popular component of cloud data platform architectures is the Data Lake. If you are curious about how to implement a Data Lake with AWS and which services to use, have a look at those blog posts. An architecture that provides transparency about the data in the Data Lake and makes it smoothly available for further analytics in a Data Warehouse is called a Data Lakehouse.

In our customer projects, the same objectives come up again and again when setting up a Data Lakehouse. One of them is data protection: when building a Data Lakehouse from scratch, data protection requirements must be considered from the start. That is why we cover the different aspects of data protection in this blog series. The basic data protection requirements are set out by the GDPR. They include protecting data access, protecting personally identifiable information (PII), the right of customers to know which data is stored about them, and their right to have their data deleted. In this first part of the series, we focus on ensuring that PII data can be treated separately.

Make it a general framework!

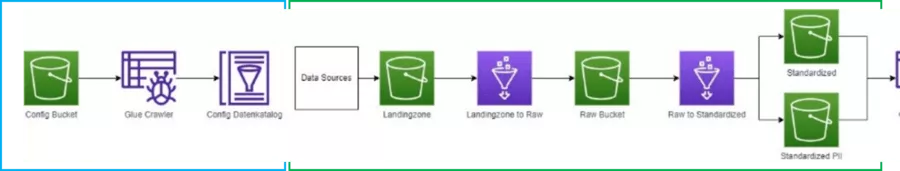

Here, we describe our architecture framework for the Data Lake part of a Lakehouse on AWS. It automatically catalogs data arriving in the Data Lake and makes it readily available for gaining valuable insights. Because it is implemented as a generalized framework, a fully working, well-structured Lakehouse can be set up quickly and out of the box for customer projects. At the same time, the framework is built on the services one would typically use for a Data Lakehouse on AWS, so customers can leverage any AWS Data & Analytics know-how they have already acquired. We deploy S3 buckets for the different Data Lake layers, Glue crawlers to create data catalogs, and Glue jobs to move the data along the pipeline through the Data Lake layers. All of this is implemented with the AWS Cloud Development Kit (CDK), AWS's Infrastructure as Code framework.
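
To make the "generalized framework" idea more concrete, here is a minimal sketch, assuming all resource names are derived from a single project prefix so that a new customer setup only needs that prefix. The `LakeLayout` class, the layer spellings and the naming scheme are hypothetical. In a CDK app, names like these would be passed to constructs such as `aws_s3.Bucket`, `aws_glue.CfnCrawler` and `aws_glue.CfnJob`; those are left out so the sketch runs without AWS dependencies.

```python
import re
from dataclasses import dataclass

# One bucket per Data Lake layer as described in this post; the exact
# layer spellings are assumptions for this sketch.
LAYERS = ("config", "landing", "raw", "standardized-pii", "standardized-nonpii")


@dataclass(frozen=True)
class LakeLayout:
    """Hypothetical naming scheme for one project's Data Lake resources."""

    project: str

    def __post_init__(self) -> None:
        # S3 bucket names must be lowercase; a short, safe prefix keeps every
        # derived bucket name within S3's 63-character limit.
        if not re.fullmatch(r"[a-z0-9][a-z0-9-]{1,30}", self.project):
            raise ValueError(f"invalid project prefix: {self.project!r}")

    def bucket(self, layer: str) -> str:
        if layer not in LAYERS:
            raise KeyError(f"unknown layer: {layer}")
        return f"{self.project}-{layer}"

    def buckets(self) -> dict:
        return {layer: self.bucket(layer) for layer in LAYERS}

    def crawlers(self) -> list:
        # Crawlers catalog the config bucket and both standardized buckets.
        return [f"{self.bucket(layer)}-crawler"
                for layer in ("config", "standardized-pii", "standardized-nonpii")]

    def jobs(self) -> list:
        # One Glue job per hop through the layers.
        return [f"{self.project}-landing-to-raw", f"{self.project}-raw-to-standardized"]


layout = LakeLayout("acme")
print(layout.bucket("raw"))   # acme-raw
print(layout.crawlers()[0])   # acme-config-crawler
```

Deriving every name from one validated prefix is what lets the same code base be deployed per customer without hand-editing resource names.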

But how does it work?

The diagram gives an overview of our Data Lake framework. In the first step (blue box), a data sample for a use case is uploaded to the S3 config bucket. The files in this bucket form the basis for the configuration information. As soon as a new file arrives in the bucket, a Glue crawler is started, which populates our Config Data Catalog with the schemas, tables and columns for the Lakehouse; one table is created in the Config Data Catalog for each file added. The next step is the only individual, manual one: the columns in the Config Data Catalog are the central place to define Metadata such as primary keys, business keys or whether a column contains PII data (check out the screenshot below).

We will benefit from this Metadata once we get to the ETL Process.
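
The Metadata flags set in this manual step can be pictured as a small data structure. The sketch below assumes one boolean flag per Metadata type; how the flags are actually stored in the Glue Data Catalog is not described here, so `ColumnMeta`, `TableConfig` and the sample `customers` table are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMeta:
    """Metadata for one column of a Config Data Catalog table (hypothetical shape)."""

    name: str
    data_type: str
    is_primary_key: bool = False
    is_business_key: bool = False
    is_pii: bool = False


@dataclass(frozen=True)
class TableConfig:
    """One table per uploaded sample file, as created by the config crawler."""

    name: str
    columns: tuple  # tuple of ColumnMeta, in file column order

    def primary_keys(self) -> list:
        return [c.name for c in self.columns if c.is_primary_key]

    def pii_columns(self) -> list:
        return [c.name for c in self.columns if c.is_pii]

    def non_pii_columns(self) -> list:
        return [c.name for c in self.columns if not c.is_pii]


customers = TableConfig(
    name="customers",
    columns=(
        ColumnMeta("customer_id", "bigint", is_primary_key=True),
        ColumnMeta("email", "string", is_business_key=True, is_pii=True),
        ColumnMeta("full_name", "string", is_pii=True),
        ColumnMeta("country", "string"),
    ),
)
print(customers.pii_columns())   # ['email', 'full_name']
print(customers.primary_keys())  # ['customer_id']
```

Once the flags exist, the ETL jobs no longer need any table-specific code: they only ask the configuration which columns are keys and which are PII.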

Now, when data arrives in the landing zone bucket, a trigger starts the framework's data pipeline, which processes the file through the Data Lake layers. So far, only batch processing of CSV files has been implemented, but the framework can be extended to other file formats and ingestion types.
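
A minimal sketch of such a trigger, assuming it is a Lambda function subscribed to the landing bucket's S3 event notifications. The job name `landing-to-raw` and the argument names `--source_bucket` and `--source_key` are hypothetical. The Glue call is injected as `start_job` so the sketch runs without AWS.

```python
import urllib.parse

# Hypothetical name of the Glue job that moves files from landing to raw.
LANDING_TO_RAW_JOB = "landing-to-raw"


def handler(event, context=None, start_job=None):
    """Start the landing-to-raw job once per new CSV object in an S3 event.

    `start_job(job_name, arguments)` is injected so the sketch runs locally.
    """
    started = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith(".csv"):
            continue  # only batch CSV files are supported so far
        # Glue job arguments are passed with a leading "--".
        arguments = {"--source_bucket": bucket, "--source_key": key}
        if start_job is not None:
            start_job(LANDING_TO_RAW_JOB, arguments)
        started.append(arguments)
    return started
```

In a deployed function, a thin wrapper would create `glue = boto3.client("glue")` and call `handler(event, context, start_job=lambda name, args: glue.start_job_run(JobName=name, Arguments=args))`. Filtering on the file extension in the trigger keeps unsupported formats out of the pipeline until the framework is extended.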

As the first step of the data pipeline, a Glue ETL job is started to transfer the files to the Raw bucket. We implemented the automatic trigger as an AWS Lambda function; a more recent alternative on AWS for starting the pipeline is Glue Workflows. The Metadata from the Config Data Catalog, such as the column names and which columns contain PII data, supplies the file-specific variables the ETL script needs. As part of this processing step, the data is enriched, e.g. with a loading timestamp, and converted to Parquet, a columnar file format, so that it is stored efficiently in the Data Lake.
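
The enrichment part of this step can be sketched with the standard library alone, assuming the expected column names come from the Config Data Catalog. The helper `enrich_csv` and the column name `load_ts` are hypothetical, and the Parquet conversion is deliberately left out because it needs a library such as pyarrow or Glue's own writer.

```python
import csv
import io
from datetime import datetime, timezone


def enrich_csv(csv_text, expected_columns, load_ts=None):
    """Parse one landing-zone CSV file, check its header against the column
    names from the Config Data Catalog and add a load timestamp to each row.

    Parquet conversion is omitted; this sketch returns plain row dictionaries.
    """
    expected = list(expected_columns)
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != expected:
        raise ValueError(f"header {reader.fieldnames} does not match config {expected}")
    # One timestamp per file, so all rows of a load share the same value.
    stamp = (load_ts or datetime.now(timezone.utc)).isoformat()
    return [{**row, "load_ts": stamp} for row in reader]
```

Validating the header against the configuration before writing anything means a file whose schema has drifted fails early in the Raw step instead of producing inconsistent tables further down the pipeline.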

When data arrives in the Raw bucket, a Lambda function triggers a second Glue job. In this step, the Metadata from the Config Data Catalog is used to separate PII from non-PII data. When developing the framework, we chose to handle PII data by splitting it into two standardized buckets, one for PII and one for non-PII data.
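
At its core, this step is a column projection driven by the Metadata. Here is a minimal, library-free sketch of the split using row dictionaries instead of Spark DataFrames. `split_pii` is a hypothetical helper, and copying the primary key into both parts (so authorized users can join them again) is our assumption for this example, not necessarily how the framework does it.

```python
def split_pii(rows, pii_columns, key_columns):
    """Split row dicts into a PII part and a non-PII part.

    Assumption for this sketch: the key columns appear in both parts so the
    two datasets can be joined back together by authorized users.
    """
    pii = set(pii_columns)
    keys = list(key_columns)
    # A key that is itself PII would leak into the non-PII bucket.
    overlap = pii.intersection(keys)
    if overlap:
        raise ValueError(f"key columns must not be PII: {sorted(overlap)}")
    pii_rows, non_pii_rows = [], []
    for row in rows:
        key_part = {k: row[k] for k in keys}
        pii_rows.append({**key_part, **{c: v for c, v in row.items() if c in pii}})
        non_pii_rows.append({c: v for c, v in row.items() if c not in pii})
    return pii_rows, non_pii_rows
```

In a Glue (Spark) job, the same split would be two `select` calls on the DataFrame, each written to its own standardized bucket.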

As the final step, Glue crawlers for the standardized buckets are started. They catalog the new data and thereby complete the standardized data layer. From here, the data is ready for whatever analyses and insights you need, and additional Data Model Layers can easily be connected if required.
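
Starting the crawlers could look like the sketch below. It uses two Glue client calls, `get_crawler` and `start_crawler`, and skips crawlers that are not in the `READY` state. The check is not race-free: if a crawler starts between the two calls, `start_crawler` fails with a `CrawlerRunningException`, which production code should handle. The client is passed in, so the hypothetical helper `start_crawlers` can be exercised with a stub; crawler names are placeholders.

```python
def start_crawlers(glue, crawler_names):
    """Start each crawler that is idle and return the names actually started.

    `glue` is expected to behave like boto3's Glue client
    (get_crawler(Name=...) and start_crawler(Name=...)).
    """
    started = []
    for name in crawler_names:
        # Crawler state is one of READY, RUNNING or STOPPING.
        state = glue.get_crawler(Name=name)["Crawler"]["State"]
        if state == "READY":
            glue.start_crawler(Name=name)
            started.append(name)
    return started
```

With boto3 this would be called as `start_crawlers(boto3.client("glue"), ["acme-standardized-pii-crawler", "acme-standardized-nonpii-crawler"])`.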

Can we make it even better?

To achieve even higher data protection standards, we plan to enhance the framework in upcoming development stages so that PII data is encrypted. Encryption adds another layer of security: no PII is stored unencrypted, so unauthorized persons would not only have to gain access to the S3 buckets but would also need to obtain the encryption key to read the data. Further, AWS services such as Amazon Macie can be integrated to continuously monitor incoming data and automatically detect columns that contain PII data but are not yet treated as such.
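
Macie is a managed service with its own data identifiers, so it cannot be reproduced in a few lines. To make the idea of "columns that contain PII but are not yet treated as such" concrete, here is a deliberately naive, regex-based stand-in, which is a different technique from Macie's: it checks sample values against a few patterns and reports columns that match but are not flagged as PII in the Config Data Catalog. The patterns, the threshold and the helper `untreated_pii_columns` are assumptions.

```python
import re

# Deliberately simple, illustrative patterns (assumptions). Macie uses its own,
# much broader managed data identifiers instead.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "iban": re.compile(r"[A-Z]{2}\d{2}[A-Z0-9]{11,30}"),
}


def untreated_pii_columns(sample_rows, pii_columns, min_share=0.5):
    """Map columns that are not flagged as PII, but whose non-empty sample
    values mostly match a PII pattern, to the matching pattern label."""
    flagged = set(pii_columns)
    findings = {}
    columns = list(sample_rows[0]) if sample_rows else []
    for column in columns:
        if column in flagged:
            continue
        values = [str(row.get(column, "")).strip() for row in sample_rows]
        values = [v for v in values if v]
        if not values:
            continue
        for label, pattern in PII_PATTERNS.items():
            hits = sum(1 for v in values if pattern.fullmatch(v))
            if hits / len(values) >= min_share:
                findings[column] = label
                break
    return findings
```

Feeding such findings back into the Config Data Catalog would close the loop: a column flagged here would be marked as PII and from then on be routed to the PII bucket.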

Now that the data is in the appropriate buckets, the next part of our blog series shows how to easily administer who has access to which data with AWS Lake Formation.